I’ve always found that my learning accelerates when I have a genuine need or desire to know something. When it comes to AI, I’ve been intrigued, but connecting it to my personal interests felt like a stretch. At work, we’re diving into AI, but how it connects to my focus on cloud computing is still taking shape. As for personal interests, I struggled to see how AI could enhance my passion for music; I had been thinking about practical applications for customers rather than my own pursuits.

Recently, something sparked my interest in leveraging AI to delve deeper into music. Music has been a significant part of my life from grade school through college, but as life shifted gears with marriage, kids, and chauffeuring duties, my creative energy for music waned.

Fast forward to this week. I’ve had a few melodies lingering in my mind since high school, but the time and inspiration to expand on them never seemed to align. So I set out to explore the intersection of AI and music.

This journey, which I plan to share over several posts, began with a simple idea but quickly evolved into a series of challenges and discoveries. Here’s a brief summary:

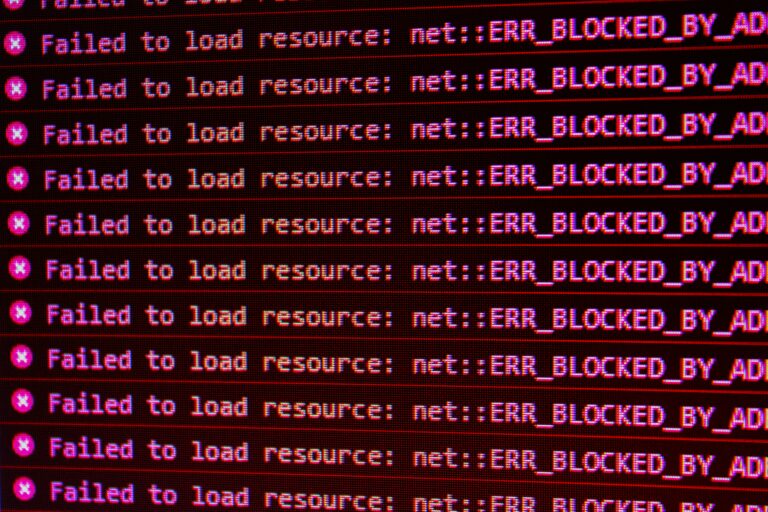

- I found an adapter to connect an old USB MIDI cord to my iPhone, but I had to search for an app capable of recording the MIDI input.

- I knew MIDI as a way to capture a performance electronically (it stores note events such as pitch, timing, and velocity rather than audio), but I realized I knew little about the format itself beyond making recordings.

- I imported the MIDI file into an app on my old Mac for editing but soon realized that I wanted AI to assist with the creative process.

- Research led me to tools that could import MIDI files, but the process wasn’t straightforward.

- Eventually, I stumbled upon using ChatGPT to manipulate the music, but I had to convert the MIDI file to ABC notation (a plain-text music format) first, then back to MIDI.

- I also needed another app to import, enhance, and export the MIDI file into a playable format.
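ABC notation writes each note as a short plain-text token, which is presumably what makes it a workable bridge to a text-based chat tool. The post doesn't say which converter handled the MIDI-to-ABC step, so the following is only a hypothetical Python sketch of the core idea: mapping MIDI note numbers to ABC pitch tokens and wrapping them in a minimal tune header. The names `midi_to_abc` and `build_abc_tune` are illustrative, and the sketch assumes MIDI note 60 is middle C (written `C` in ABC), sharps rather than flats for black keys, and every note at the default eighth-note length. It ignores rhythm, bar lines, and the ABC rule that an accidental carries to later notes in the same bar, so a real converter has to do considerably more.

```python
# Hypothetical sketch: map MIDI note numbers to ABC notation pitch tokens.
# Assumptions (not from the post): MIDI 60 = middle C = "C" in ABC,
# black keys spelled with sharps ("^"), and every note uses the default
# length from the L: header. Rhythm, bar lines, and accidental carry-over
# within a bar are NOT handled.

# One octave of pitch-class spellings, starting at C.
PITCH_CLASSES = ["C", "^C", "D", "^D", "E", "F", "^F", "G", "^G", "A", "^A", "B"]


def midi_to_abc(note: int) -> str:
    """Convert a MIDI note number (0-127) to an ABC pitch token."""
    if not 0 <= note <= 127:
        raise ValueError(f"MIDI note out of range: {note}")
    octave, pc = divmod(note, 12)  # e.g. MIDI 60 -> octave index 5, pitch class 0
    name = PITCH_CLASSES[pc]
    accidental, letter = name[:-1], name[-1]
    if octave >= 6:
        # The octave starting at MIDI 72 is lowercase; each higher octave adds "'".
        return accidental + letter.lower() + "'" * (octave - 6)
    # The octave starting at middle C (MIDI 60) is uppercase; each lower octave adds ",".
    return accidental + letter + "," * (5 - octave)


def build_abc_tune(title: str, notes: list[int], key: str = "C", meter: str = "4/4") -> str:
    """Assemble a minimal ABC tune: header fields followed by one line of notes."""
    header = [
        "X:1",         # reference number; must come first
        f"T:{title}",  # title
        f"M:{meter}",  # meter
        "L:1/8",       # default note length: eighth note
        f"K:{key}",    # key; must be the last header field
    ]
    body = " ".join(midi_to_abc(n) for n in notes)
    return "\n".join(header + [body])


if __name__ == "__main__":
    print(build_abc_tune("Sketch", [60, 62, 64, 65, 67]))
```

Run as a script, this prints the five header lines followed by `C D E F G`. Going back from ABC to MIDI is the reverse lookup plus timing; existing command-line tools such as `midi2abc` and `abc2midi` from the abcMIDI package cover both directions and handle rhythm as well.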

This condensed summary doesn’t capture all the starts and stops, but it was undoubtedly a learning experience.

The “before” clip in the attached audio is a raw snippet of me playing the medley I’ve been tinkering with for over 30 years. The “after” clip is the result of ChatGPT’s edits, followed by remixing with fadr.com and exporting to a WAV file via Staccato.ai.

The resulting composition, while not polished enough for public release, incorporates vocals, French horn, drums, trumpet, and other elements, none of which I played myself. ChatGPT’s main contribution was adding complementary changes to the melody, with mixed results.

In less than five hours, this experiment yielded a new, enhanced version of the melody and showed what today’s tools can do. It also underscored how much more knowledge and experience I need to use them to their full potential.

Part 2 will take a deeper dive into tools that can help me build on this, and into my ability to navigate them. I came across a very promising tool called MuseNet, created by OpenAI a few years ago, but it has since been retired. I’m planning to explore some of the open-source recreations of it to see how running them locally might open up new options. Before diving in, though, I need to refresh my GitHub knowledge and deploy a few of these projects to explore their capabilities.

The world is vast, and the world of technology even more so. By many accounts, it’s limitless. So why not look for an interest or passion to explore and gain knowledge?

Have a great weekend!

Jack

My melody before help from AI –

My melody after help from AI –

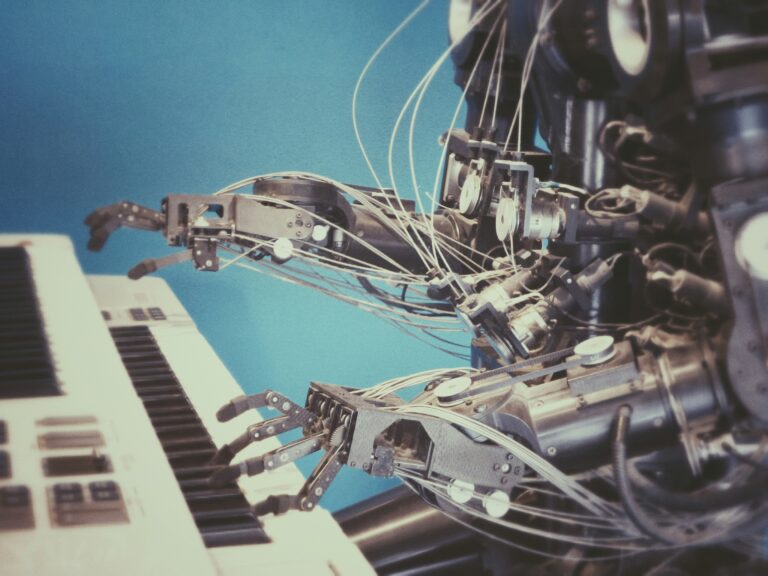

Photo by Possessed Photography on Unsplash